Foreword

Installing Hadoop with HDP does not always go smoothly, and you will inevitably run into a lot of errors. Here are the solutions to some of the errors I hit.

MySQL

MySQL JDBC driver not found

The following commands are run as the root user.

# yum install mysql-connector-java -y

# ambari-server setup --jdbc-db=mysql --jdbc-driver=/usr/share/java/mysql-connector-java.jar
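The two commands can be wrapped in a small script that fails early when the driver jar is missing. This is only a sketch. The names run and register_mysql_jdbc and the DRY_RUN=1 switch, which prints the commands instead of running them, are my own illustrative choices. The default jar path is the one used above.

```shell
#!/usr/bin/env bash
# Sketch: register the MySQL JDBC driver with Ambari, failing early if the jar is missing.
# run, register_mysql_jdbc and DRY_RUN are illustrative names, not part of Ambari.

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

register_mysql_jdbc() {
  # Default path used in the command above (installed by the mysql-connector-java package).
  local jar="${1:-/usr/share/java/mysql-connector-java.jar}"
  if [ ! -f "$jar" ]; then
    echo "JDBC driver not found: $jar (install mysql-connector-java first)" >&2
    return 1
  fi
  run ambari-server setup --jdbc-db=mysql --jdbc-driver="$jar"
}

# Usage (as root): register_mysql_jdbc
```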

Sqoop2

Using Sqoop2 on HDP 2.5 to extract database tables into HDFS also runs into quite a few problems, so I record them here.

Startup error

org.apache.sqoop.common.SqoopException: CLIENT_0004: Unable to find valid Kerberos ticket cache (kinit)

This error comes from the sqoopUrl setting, which was originally:

http://master:12000/sqoop

It needs to be changed to:

http://master:12000/sqoop/

A nasty pitfall: the error message mentions Kerberos, but the whole difference is a single trailing slash.
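A trailing slash is easy to lose when the URL is copied between configs. Here is a minimal sketch that normalises it. with_trailing_slash is a hypothetical helper. The commented set server --url line is the Sqoop2 shell command as I understand it from the 1.99.x client, so check it against your version.

```shell
#!/usr/bin/env bash
# Sketch: make sure the Sqoop2 server URL ends with "/" before handing it to the client.
# with_trailing_slash is an illustrative helper, not part of Sqoop2.

with_trailing_slash() {
  local url="$1"
  case "$url" in
    */) printf '%s\n' "$url" ;;   # already ends with a slash
    *)  printf '%s/\n' "$url" ;;  # append the missing slash
  esac
}

SQOOP_URL=$(with_trailing_slash "http://master:12000/sqoop")
echo "$SQOOP_URL"   # http://master:12000/sqoop/

# Inside the Sqoop2 shell the server can then be set with (assumed 1.99.x syntax):
#   set server --url http://master:12000/sqoop/
```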

Mapreduce

Startup error

Caused by: java.net.URISyntaxException: Illegal character in path at index 11: /hdp/apps/${hdp.version}/mapreduce/mapreduce.tar.gz#mr-framework

This is caused by the MapReduce framework path setting. Open the HDP web console and go to

MapReduce2 -> Configs -> Advanced, then expand Advanced mapred-site.

Find the mapreduce.application.framework.path property and change its value from

/hdp/apps/${hdp.version}/mapreduce/mapreduce.tar.gz#mr-framework

to

/usr/hdp/${hdp.version}/hadoop/mapreduce.tar.gz#mr-framework

Then create the corresponding directory on HDFS, for example:

/usr/hdp/2.6.0.3-8/hadoop/

Then upload the mapreduce.tar.gz file to this directory.

Finally, restart the service.

If that still doesn't work, try replacing ${hdp.version} with the actual version string (for example 2.6.0.3-8) and restarting ambari-server.
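The HDFS side of this fix can be scripted roughly as follows. This is a sketch built on several assumptions:

- The function names and the DRY_RUN switch are illustrative.
- The local tarball is assumed to be at /usr/hdp/<version>/hadoop/mapreduce.tar.gz.
- It should be run as the HDFS superuser (for example via sudo -u hdfs), because it usually creates a new top-level /usr directory on HDFS.

hdp-select versions should list the installed version strings.

```shell
#!/usr/bin/env bash
# Sketch: resolve ${hdp.version} and upload mapreduce.tar.gz to the matching HDFS directory.
# Function names and DRY_RUN are illustrative; the local tarball location is an assumption.
# Run as the HDFS superuser (e.g. sudo -u hdfs), since it may create /usr on HDFS.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# Replace the literal ${hdp.version} placeholder in a path with a concrete version.
expand_hdp_version() {
  printf '%s\n' "$1" | sed 's/\${hdp\.version}/'"$2"'/g'
}

upload_mr_framework() {
  local version="$1"
  # Assumed local location of the tarball shipped with HDP; pass a second argument if yours differs.
  local tarball="${2:-/usr/hdp/$version/hadoop/mapreduce.tar.gz}"
  local dir
  dir=$(expand_hdp_version '/usr/hdp/${hdp.version}/hadoop/' "$version")
  run hdfs dfs -mkdir -p "$dir"
  run hdfs dfs -put -f "$tarball" "$dir"
}

# Usage: find the version with `hdp-select versions`, then e.g.
#   upload_mr_framework 2.6.0.3-8
```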

Yarn

Error when starting Sqoop2

Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: yarn is not allowed to impersonate lu

This is a permission issue introduced in Hadoop 2. The locally submitted job needs the yarn user to run it on behalf of the submitting user. However, yarn is not allowed to impersonate that user, so this error is reported. To fix it, open the HDP web console and go to

HDFS -> Configs -> Advanced

Expand Custom core-site further down, add the following properties, and then restart the service.

hadoop.proxyuser.yarn.hosts=*

hadoop.proxyuser.yarn.groups=*
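A small helper can print the property lines (avoiding typos) and confirm the values after the restart. The sketch below does both. proxyuser_props and check_proxyuser are hypothetical helpers. check_proxyuser relies on hdfs getconf -confKey reading the local client configuration, so run it on a node whose core-site has been refreshed.

```shell
#!/usr/bin/env bash
# Sketch: print the proxyuser properties for a service user and check what Hadoop currently sees.
# proxyuser_props / check_proxyuser are illustrative helpers, not Hadoop commands.

# Print the two core-site properties that let USER impersonate others from any host/group.
proxyuser_props() {
  local user="$1"
  echo "hadoop.proxyuser.${user}.hosts=*"
  echo "hadoop.proxyuser.${user}.groups=*"
}

# After the restart, show the values found in this node's client configuration.
check_proxyuser() {
  local user="$1"
  hdfs getconf -confKey "hadoop.proxyuser.${user}.hosts"
  hdfs getconf -confKey "hadoop.proxyuser.${user}.groups"
}

# Usage: proxyuser_props yarn    # lines to add under Custom core-site
#        check_proxyuser yarn    # on a cluster node, after restarting HDFS
```

Note that * lets yarn impersonate users from any host and in any group. On a shared cluster you may prefer to list specific hosts and groups.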

Oozie

Question 1

When using Oozie to schedule Sqoop, note that the jobTracker port is not necessarily 8021 or 8032. Online articles and even the official documentation are somewhat misleading on this point. HDP 2.5 ships Hadoop 2.x, so jobTracker should actually be set to the value of

yarn.resourcemanager.address

Its value is master:8050 in HDP 2.5 (8032 in CDH), where master is the host name of the Hadoop ResourceManager. You can look it up in the HDP web console under

YARN -> Configs -> Advanced -> Advanced yarn-site

by expanding that section and finding the

yarn.resourcemanager.address

property.
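If you generate job.properties from a script, the jobTracker value can be read straight from yarn-site.xml instead of being guessed. This rough sketch makes three assumptions:

- The client configuration lives in /etc/hadoop/conf.
- As in Ambari-generated files, each name tag is followed by its value tag.
- get_xml_prop, write_job_properties and the default nameNode URI are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: read yarn.resourcemanager.address from yarn-site.xml and emit it as jobTracker
# for an Oozie job.properties. File path and nameNode default are assumptions.

# Print the <value> of a Hadoop XML property (expects <name> before its <value>).
get_xml_prop() {
  local file="$1" key="$2"
  awk -v key="$key" '
    index($0, "<name>" key "</name>") { found = 1 }
    found && /<value>/ {
      sub(/.*<value>/, ""); sub(/<\/value>.*/, ""); print; exit
    }' "$file"
}

write_job_properties() {
  local yarn_site="${1:-/etc/hadoop/conf/yarn-site.xml}"
  local name_node="${2:-hdfs://master:8020}"   # hypothetical NameNode URI
  local jt
  jt=$(get_xml_prop "$yarn_site" yarn.resourcemanager.address)
  cat <<EOF
nameNode=${name_node}
jobTracker=${jt}
EOF
}

# Usage: write_job_properties > job.properties
```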

Question 2

When Oozie schedules Sqoop1, the MapReduce application runs successfully, but the final job is killed with the following error:

Launcher ERROR, reason: Main class [org.apache.oozie.action.hadoop.SqoopMain], exit code [1]

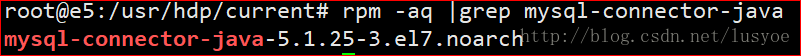

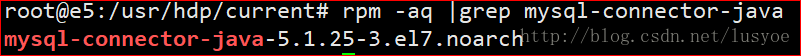

The message is very short, and tracking down the cause took a frustratingly long time. The problem turned out to be related to the MySQL JDBC driver version: I was originally using 5.1.25.

After replacing every copy with 5.1.29 and restarting the service, the job ran fine.

The jars need to be replaced in the following directories.

The version number in the middle of each path is the HDP version; adjust it to match your installation.

/usr/hdp/2.5.3.0/oozie/oozie-server/webapps/oozie/WEB-INF/lib/

/usr/hdp/2.5.3.0/oozie/libext/

/usr/hdp/2.5.3.0/oozie/share/lib/oozie/

/usr/hdp/2.5.3.0/oozie/share/lib/sqoop/

/usr/hdp/2.5.3.0/sqoop/lib/
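The local replacement can be done in one pass over the five directories above. This is a sketch, not a tested procedure. The function name and the DRY_RUN switch are my own. It deletes everything matching mysql-connector-java*.jar in those directories, including unversioned symlinks, so run it with DRY_RUN=1 first to review the commands. The curl line is one way to fetch the 5.1.29 jar from Maven Central.

```shell
#!/usr/bin/env bash
# Sketch: swap every mysql-connector-java jar in the local Oozie/Sqoop lib directories
# for a new one. Directory list is from this post; replace_mysql_driver is illustrative.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

replace_mysql_driver() {
  local hdp_ver="$1" new_jar="$2"
  local dirs=(
    "/usr/hdp/$hdp_ver/oozie/oozie-server/webapps/oozie/WEB-INF/lib"
    "/usr/hdp/$hdp_ver/oozie/libext"
    "/usr/hdp/$hdp_ver/oozie/share/lib/oozie"
    "/usr/hdp/$hdp_ver/oozie/share/lib/sqoop"
    "/usr/hdp/$hdp_ver/sqoop/lib"
  )
  local d old
  for d in "${dirs[@]}"; do
    # Remove existing connector jars (e.g. 5.1.25), including unversioned symlinks.
    for old in "$d"/mysql-connector-java*.jar; do
      if [ -e "$old" ]; then run rm -f "$old"; fi
    done
    run cp "$new_jar" "$d/"
  done
}

# Fetch 5.1.29, replace, then restart Oozie:
#   curl -fLO https://repo1.maven.org/maven2/mysql/mysql-connector-java/5.1.29/mysql-connector-java-5.1.29.jar
#   replace_mysql_driver 2.5.3.0 ./mysql-connector-java-5.1.29.jar
```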

The following two paths are on HDFS, and the new jar must be uploaded to them as well.

hdfs://e5:8020/user/oozie/share/lib/lib_20170412010303/oozie/

hdfs://e5:8020/user/oozie/share/lib/lib_20170412010303/sqoop/

I downloaded the new jar via Maven.
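On HDFS it is probably worth removing the old driver as well, so that only one version ends up on the sharelib classpath. This sketch makes a few assumptions:

- It uses the hosts and lib_<timestamp> directory from this post.
- replace_sharelib_driver and DRY_RUN are illustrative names.
- The glob is quoted so that HDFS, not the local shell, expands it.
- -rm -f tolerates the case where nothing matches.

```shell
#!/usr/bin/env bash
# Sketch: replace the MySQL driver in the Oozie sharelib on HDFS.
# SHARELIB is the lib_<timestamp> directory from this post; change it for your cluster.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

SHARELIB="hdfs://e5:8020/user/oozie/share/lib/lib_20170412010303"

replace_sharelib_driver() {
  local jar="$1" sub
  for sub in oozie sqoop; do
    # Quoted so HDFS expands the glob; -f ignores "no such file".
    run hdfs dfs -rm -f "$SHARELIB/$sub/mysql-connector-java-*.jar"
    run hdfs dfs -put -f "$jar" "$SHARELIB/$sub/"
  done
}

# Usage (as the oozie or hdfs user), then restart Oozie:
#   replace_sharelib_driver mysql-connector-java-5.1.29.jar
```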

Question 3

When the scheduled Sqoop job starts, it fails with a ClassNotFoundException. The error message is as follows:

Launcher exception: java.lang.ClassNotFoundException: Class org.apache.oozie.action.hadoop.SqoopMain not found

This happens because one jar was not uploaded to HDFS in the previous step. Find the following jar:

/usr/hdp/2.5.3.0/oozie/libserver/oozie-sharelib-sqoop-4.2.0.2.6.0.3-8.jar

Then upload it to

hdfs://e5:8020/user/oozie/share/lib/lib_20170412010303/oozie/

Then refresh the sharelib or restart the service.

Refresh command:

# oozie admin -oozie http://e5:11000/oozie -sharelibupdate

I recommend uploading all of the Oozie-related sharelib jars now, since they may be needed later, so that this error does not come up again.
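The upload, the refresh and a quick check can be combined as below. This is a sketch only. Paths and hosts are the ones from this post, and sync_sharelib_jars and DRY_RUN are illustrative names. oozie admin -shareliblist, available in Oozie 4.x, lists the jars the server sees for a given sharelib.

```shell
#!/usr/bin/env bash
# Sketch: upload the Oozie sharelib jars from libserver to HDFS, refresh, and list the result.
# Paths, hosts and the lib_<timestamp> directory are from this post; adjust for your cluster.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

LIBSERVER="/usr/hdp/2.5.3.0/oozie/libserver"
SHARELIB_OOZIE="hdfs://e5:8020/user/oozie/share/lib/lib_20170412010303/oozie/"
OOZIE_URL="http://e5:11000/oozie"

sync_sharelib_jars() {
  local jar found=0
  # Upload every oozie-sharelib-*.jar found in libserver.
  for jar in "$LIBSERVER"/oozie-sharelib-*.jar; do
    [ -e "$jar" ] || continue
    found=1
    run hdfs dfs -put -f "$jar" "$SHARELIB_OOZIE"
  done
  if [ "$found" = 0 ]; then
    echo "no oozie-sharelib-*.jar under $LIBSERVER" >&2
    return 1
  fi
  run oozie admin -oozie "$OOZIE_URL" -sharelibupdate
  # Show what the Oozie server now sees in the sqoop sharelib.
  run oozie admin -oozie "$OOZIE_URL" -shareliblist sqoop
}

# Usage (as the oozie user): sync_sharelib_jars
```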

HBase

The HBase RegionServers in the cluster report errors on startup:

Question 1

Caused by: ClassNotFoundException: org.apache.hadoop.fs.FileSystem

This error occurs because hadoop-common.jar is not on HBase's classpath.

Log in to the HDP web console and click HBase on the left, then Configs -> Advanced.

Expand Advanced hbase-env and find the line # Extra Java CLASSPATH elements. Optional.

Add the following line below it:

export HBASE_CLASSPATH=${HBASE_CLASSPATH}:/usr/hdp/current/hadoop-client/hadoop-common.jar

Then restart the HBase service.

Question 2

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.util.PlatformName

This problem is similar to the one above: a jar is missing from the classpath.

Follow the same steps and also add hadoop-auth.jar:

export HBASE_CLASSPATH=${HBASE_CLASSPATH}:/usr/hdp/current/hadoop-client/hadoop-common.jar:/usr/hdp/current/hadoop-client/hadoop-auth.jar

Question 3

Caused by: java.io.IOException: No FileSystem for scheme: hdfs

The cause is the same as above; add hadoop-hdfs.jar to the classpath as well:

export HBASE_CLASSPATH=${HBASE_CLASSPATH}:/usr/hdp/current/hadoop-client/hadoop-common.jar:/usr/hdp/current/hadoop-client/hadoop-auth.jar:/usr/hdp/current/hadoop-hdfs-client/hadoop-hdfs.jar
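The three fixes differ only in which jars they append, so the final line can be generated, with a warning for any jar missing on the host. build_hbase_classpath is a hypothetical helper. The jar paths in the usage comment are the ones above.

```shell
#!/usr/bin/env bash
# Sketch: build the HBASE_CLASSPATH line for Advanced hbase-env from a list of jars,
# warning about any jar that does not exist on this host. build_hbase_classpath is illustrative.

build_hbase_classpath() {
  # Keep ${HBASE_CLASSPATH} literal so hbase-env expands it at runtime.
  local cp='${HBASE_CLASSPATH}' jar
  for jar in "$@"; do
    [ -e "$jar" ] || echo "warning: $jar not found on this host" >&2
    cp="$cp:$jar"
  done
  printf 'export HBASE_CLASSPATH=%s\n' "$cp"
}

# Usage: paste the printed line below "# Extra Java CLASSPATH elements. Optional."
#   build_hbase_classpath \
#     /usr/hdp/current/hadoop-client/hadoop-common.jar \
#     /usr/hdp/current/hadoop-client/hadoop-auth.jar \
#     /usr/hdp/current/hadoop-hdfs-client/hadoop-hdfs.jar
```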

Hue

After integrating Hue into HDP, opening the Oozie Dashboard reports an error:

User [hue] not defined as proxyuser

Oozie must be configured to let the hue user act as a proxy user. In the HDP web console, go to

Oozie -> Configs -> Custom oozie-site

Add the following properties and then restart the Oozie service:

oozie.service.ProxyUserService.proxyuser.hue.groups=*

oozie.service.ProxyUserService.proxyuser.hue.hosts=*
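As with the core-site proxyuser settings earlier, these property names are easy to mistype. Here is a small sketch that prints them for a given user, plus a status check after the restart. oozie_proxyuser_props and oozie_status are illustrative names. The Oozie URL defaults to the one used earlier in this post, and oozie admin -status is the CLI's standard status command.

```shell
#!/usr/bin/env bash
# Sketch: print the Oozie proxyuser properties for a user and check the server after restart.
# oozie_proxyuser_props / oozie_status are illustrative helpers, not Oozie commands.

oozie_proxyuser_props() {
  local user="$1"
  echo "oozie.service.ProxyUserService.proxyuser.${user}.groups=*"
  echo "oozie.service.ProxyUserService.proxyuser.${user}.hosts=*"
}

# After restarting Oozie, the CLI should report the system mode (e.g. NORMAL).
oozie_status() {
  oozie admin -oozie "${1:-http://e5:11000/oozie}" -status
}

# Usage: oozie_proxyuser_props hue   # lines to add under Custom oozie-site
#        oozie_status                # once Oozie has restarted
```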