Shuffle Process

Shuffle concept

Literally, to shuffle means to mix up a deck of cards, turning an ordered set of data into one that is as random as possible. In MapReduce, shuffle is closer to the inverse: it takes the unordered output of the map side and rearranges it, according to specified rules, into organized data that the reduce side can receive and process. Shuffle covers everything between the map output and the reduce input, and it can be divided into two parts: the map side and the reduce side. Before shuffle, during the map phase, MapReduce divides the input data into splits and assigns one MapTask to each split. With the default text input format, each row of a split reaches the map() function as a key-value pair (key, value), where the key is the offset of the row and the value is its content, and map() processes it to emit new key-value pairs. These output pairs are called "intermediate results". The shuffle phase comes next, and its role is to process these intermediate results.

Pause here and ask: why is shuffle needed, and what does it do?

Before understanding the specific process of shuffle, you should first understand the following two concepts:

Block (physical division)

A block is the basic storage unit in HDFS. The default size is 64 MB in Hadoop 1.x and 128 MB in Hadoop 2.x. When a file is uploaded to HDFS, its data is divided into blocks. This division is physical (the writer reads at most one block of data at a time, 128 MB by default, and writes it to HDFS). The block size is configurable via dfs.block.size (named dfs.blocksize in Hadoop 2.x, where the old name is deprecated). Blocks are replicated to protect against data loss: the default is 3 replicas, configurable through dfs.replication. Note: after the block size setting is changed, newly uploaded files use the new value, while previously uploaded files keep the block size they were written with.

Split (logical partition)

Splits in Hadoop are a logical division whose only purpose is to let map tasks obtain their data conveniently. Splits are produced by the getSplits() method of the InputFormat interface. So how is the split size determined?

First, a few quantities:

totalSize: the total size of all input files of the MapReduce job.

numSplits: comes from job.getNumMapTasks(), that is, the value the user sets with org.apache.hadoop.mapred.JobConf.setNumMapTasks(int n) when the job starts. Judging by the method name, it sets the number of map tasks. However, the final number of map tasks, which equals the number of splits, is not necessarily the value set by the user. The user's value is only a hint: an influencing factor, not the determining one.

goalSize: totalSize / numSplits, the expected split size, that is, how much data each mapper is expected to process. It is only an expectation.

minSize: the minimum split size. It can be set in two ways:

1. By calling protected void setMinSplitSize(long minSplitSize) from a subclass. The value is normally 1, except in special cases.

2. Through mapred.min.split.size in the configuration file.

The larger of the two values is used.

Split calculation formula: finalSplitSize = max(minSize, min(goalSize, blockSize))
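
To make the formula concrete, here is a minimal sketch in plain Java. The class name SplitSizeSketch, its helper methods, and the 1 GB input size are illustrative assumptions, not Hadoop's own code. Only the max/min rule and the goalSize definition come from the text above.

```java
// Illustrative sketch of the split-size rule; not taken from the Hadoop source.
final class SplitSizeSketch {

    /** finalSplitSize = max(minSize, min(goalSize, blockSize)) */
    static long computeSplitSize(long goalSize, long minSize, long blockSize) {
        return Math.max(minSize, Math.min(goalSize, blockSize));
    }

    /** goalSize = totalSize / numSplits; a hint of 0 is treated as 1 to avoid dividing by zero. */
    static long goalSize(long totalSize, int numSplits) {
        return totalSize / (numSplits == 0 ? 1 : numSplits);
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        long blockSize = 128 * mb;   // HDFS block size (Hadoop 2.x default)
        long totalSize = 1024 * mb;  // hypothetical 1 GB of input
        long minSize = 1;            // usual minimum split size

        // Hint of 2 maps: goalSize is 512 MB, so the split is capped at the block size.
        System.out.println(computeSplitSize(goalSize(totalSize, 2), minSize, blockSize) / mb);   // 128
        // Hint of 16 maps: goalSize is 64 MB, below the block size, so it wins.
        System.out.println(computeSplitSize(goalSize(totalSize, 16), minSize, blockSize) / mb);  // 64
        // A minSize of 256 MB forces splits larger than one block.
        System.out.println(computeSplitSize(goalSize(totalSize, 16), 256 * mb, blockSize) / mb); // 256
    }
}
```

The three cases show why the user's map count is only a hint: it changes the split size only when goalSize falls between minSize and blockSize.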

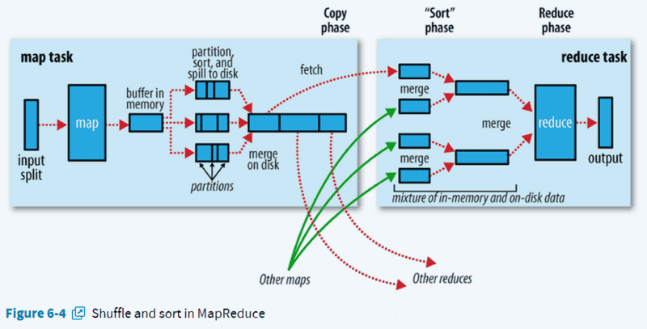

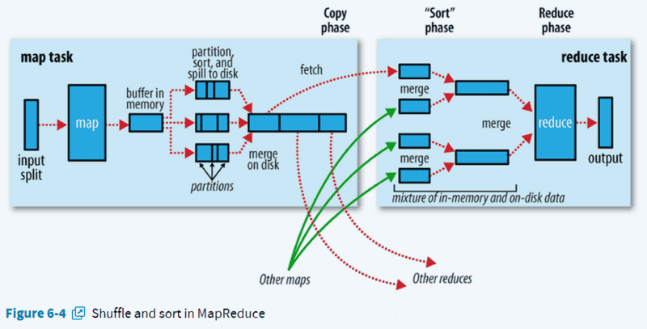

The following figure is the official flowchart of shuffle:

Shuffle Detailed Process

Map-side shuffle

① Partition

② Write to the ring memory buffer

③ Spill to disk (overflow write)

sort ---> combine (combiner) ---> write spill file

④ Merge

1:Partition

Before the (key, value) pairs emitted by the map() function are written to the buffer, they are partitioned, so that each map output record is sent to a specific reducer. Partitioning spreads the load across reducers and helps avoid data skew. MapReduce provides a default partitioning class, HashPartitioner, whose core code is as follows:

    import org.apache.hadoop.mapreduce.Partitioner;

    public class HashPartitioner<K, V> extends Partitioner<K, V> {

      /** Use {@link Object#hashCode()} to partition. */
      public int getPartition(K key, V value,
                              int numReduceTasks) {
        // Clear the sign bit so that a negative hashCode still yields a non-negative index.
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
      }

    }

The getPartition() method has three parameters: the first two are the key and value emitted by the mapper, and the third is the configured number of reduce tasks, which defaults to 1. Because the remainder of any integer divided by 1 is 0, getPartition() always returns 0 by default. In other words, all mapper output is sent to the same reducer task, and the job ends up with a single output file. To have the mapper output processed by multiple reducers, set the number of reduce tasks on the job to a value greater than 1; HashPartitioner then spreads the keys across them. For custom routing, write a class that extends Partitioner, override getPartition() so that it returns different values for different cases, and specify the partitioner class and the number of reducer tasks in the job settings.
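
The arithmetic can be tried without a Hadoop installation. The sketch below copies only the expression from getPartition() into a standalone class; HashPartitionSketch and the sample keys are illustrative assumptions.

```java
// Standalone illustration of the hash-partition expression; not a Hadoop Partitioner.
final class HashPartitionSketch {

    /** Same expression as HashPartitioner.getPartition(), without the unused value argument. */
    static int getPartition(Object key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        Object[] keys = {"a", "b", "c", Integer.valueOf(-7)};
        for (Object key : keys) {
            // With one reducer every key maps to partition 0;
            // with three reducers the keys are spread over partitions 0..2.
            System.out.println(key + " -> " + getPartition(key, 1) + " / " + getPartition(key, 3));
        }
    }
}
```

The key -7 has a negative hashCode. Without the `& Integer.MAX_VALUE` mask, Java's `%` operator would return a negative remainder, which is not a valid partition index.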

2:Write to the ring memory buffer

Because frequent disk I/O seriously reduces efficiency, the intermediate results are not written to disk immediately. They are first stored in the ring memory buffer on the map node, where some pre-sorting is done to improve efficiency. When the amount of data written reaches a preset threshold, the data is written to disk in a single I/O operation. Each map task is allocated its own ring memory buffer (100 MB by default, adjustable through mapreduce.task.io.sort.mb), which stores the key-value pairs output by the map task together with their partition. The buffered (key, value) pairs are already serialized, ready to be written to disk.

3:Spill to disk

Once the buffer content reaches the threshold (mapreduce.map.sort.spill.percent, default 0.80, or 80%), that 80% of the buffer is locked and a background thread starts to spill it (an overflow write) to local disk. The thread first sorts the key-value pairs by two keys, partition and then key, so that the data in the buffer is grouped by partition and, within each partition, ordered by key. After sorting, it creates a spill file (a temporary file) and writes this part of the data to it. If the client has configured a Combiner (roughly a reduce that runs on the map side), it is called automatically after the sort and before the spill is written, for example to add up the values of identical keys, which reduces the amount of data written to disk. This step is called "combining". While the spill is in progress, the map can keep writing key-value pairs into the remaining 20% of the buffer. Spill files are written in round-robin fashion to the directories specified by the mapreduce.cluster.local.dir property.
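
The sort-then-combine step can be sketched in plain Java as follows. This is a simplified model, not Hadoop's implementation: the Rec class, the sortAndCombine name, and the summing combiner are assumptions made for illustration, and real records are serialized bytes rather than objects.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Simplified model of what happens to the buffer contents during one spill.
final class SpillSketch {

    /** One buffered map output record: target partition, key, value. */
    static final class Rec {
        final int partition;
        final String key;
        final int value;

        Rec(int partition, String key, int value) {
            this.partition = partition;
            this.key = key;
            this.value = value;
        }

        @Override
        public String toString() {
            return "p" + partition + ":" + key + "=" + value;
        }
    }

    /** Sorts by (partition, key), then sums adjacent records with the same partition and key. */
    static List<Rec> sortAndCombine(List<Rec> buffer) {
        List<Rec> sorted = new ArrayList<>(buffer);
        sorted.sort(Comparator.comparingInt((Rec r) -> r.partition).thenComparing(r -> r.key));

        List<Rec> spill = new ArrayList<>();
        for (Rec r : sorted) {
            int last = spill.size() - 1;
            if (last >= 0 && spill.get(last).partition == r.partition && spill.get(last).key.equals(r.key)) {
                // Hypothetical summing combiner: fold the value into the previous record.
                spill.set(last, new Rec(r.partition, r.key, spill.get(last).value + r.value));
            } else {
                spill.add(r);
            }
        }
        return spill;
    }

    public static void main(String[] args) {
        List<Rec> buffer = List.of(
                new Rec(1, "b", 1), new Rec(0, "a", 1), new Rec(1, "b", 1),
                new Rec(0, "c", 1), new Rec(0, "a", 1));
        System.out.println(sortAndCombine(buffer)); // [p0:a=2, p0:c=1, p1:b=2]
    }
}
```

Five buffered records shrink to three spilled records, which is the disk-write saving the combiner is meant to provide.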

Combiner

If a Combiner is specified, it may be called in two places:

1. When a Combiner class is set for the job, the spill thread calls it as it writes the buffer to disk;

2. When the number of spill files reaches mapreduce.map.combine.minspills (default 3), it is called again while the spill files are merged.

The difference between combine and merge:

Given two key-value pairs <"a", 1> and <"a", 1>, combining them yields <"a", 2>, while merging them yields <"a", <1, 1>>.
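
The same contrast in code, as a small sketch. The class CombineVsMergeSketch and the choice of summation as the combine function are assumptions made for illustration; a real Combiner can apply any suitable reduce logic.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Contrasts combining (values are reduced) with merging (values are only collected).
final class CombineVsMergeSketch {

    /** Combine: values of the same key are folded into one value (here, by summing). */
    static Map<String, Integer> combine(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> out = new TreeMap<>();
        for (Map.Entry<String, Integer> p : pairs) {
            out.merge(p.getKey(), p.getValue(), Integer::sum);
        }
        return out;
    }

    /** Merge: values of the same key are kept side by side in a list. */
    static Map<String, List<Integer>> merge(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> out = new TreeMap<>();
        for (Map.Entry<String, Integer> p : pairs) {
            out.computeIfAbsent(p.getKey(), k -> new ArrayList<>()).add(p.getValue());
        }
        return out;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, Integer>> pairs = List.of(Map.entry("a", 1), Map.entry("a", 1));
        System.out.println(combine(pairs)); // {a=2}
        System.out.println(merge(pairs));   // {a=[1, 1]}
    }
}
```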

Special case: what happens when the amount of data is small and the buffer threshold is never reached?

There are two different claims about this:

1:Nothing is written to disk as a temporary file, and no subsequent merge takes place.

2:The data is still written to the local disk as a temporary file at the end.

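As far as I can tell, the second claim is the correct one: when the map task finishes, whatever remains in the buffer is flushed to local disk, because reducers fetch the map output from files on disk. With only one spill file, there is nothing left to merge.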

4:Merge

When a map task processes more data than fits in the buffer, it produces multiple spill files. These spill files, all generated by the same map task, must then be merged into one final large file that is partitioned and sorted. The configuration property mapreduce.task.io.sort.factor controls how many streams are merged at a time; the default is 10. This process consists of sorting and, optionally, combining. The merged file may contain several key-value pairs with the same key; if the client has set a Combiner, those pairs are combined as well (subject to the rule on when the combiner is called, described above).
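
Merging several sorted spill files is a k-way merge. The sketch below shows the idea with a priority queue over in-memory lists of keys. It is an assumption-laden simplification: SpillMergeSketch, Cursor, and mergeSortedRuns are hypothetical names, and all runs are merged in a single pass, whereas Hadoop merges at most mapreduce.task.io.sort.factor streams at a time and works on files.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;

// K-way merge of already-sorted runs, standing in for the merge of spill files.
final class SpillMergeSketch {

    /** Read position inside one sorted run. */
    private static final class Cursor {
        final List<String> run;
        int pos;

        Cursor(List<String> run) {
            this.run = run;
        }

        String head() {
            return run.get(pos);
        }
    }

    /** Repeatedly takes the smallest head among all runs until every run is exhausted. */
    static List<String> mergeSortedRuns(List<List<String>> runs) {
        Comparator<Cursor> byHead = Comparator.comparing(Cursor::head);
        PriorityQueue<Cursor> heap = new PriorityQueue<>(byHead);
        for (List<String> run : runs) {
            if (!run.isEmpty()) {
                heap.add(new Cursor(run));
            }
        }

        List<String> merged = new ArrayList<>();
        while (!heap.isEmpty()) {
            Cursor c = heap.poll();
            merged.add(c.head());
            c.pos++;
            if (c.pos < c.run.size()) {
                heap.add(c); // re-insert with its next key as the new head
            }
        }
        return merged;
    }

    public static void main(String[] args) {
        List<List<String>> spills = List.of(
                List.of("a", "c", "e"), List.of("b", "c"), List.of("a", "d"));
        System.out.println(mergeSortedRuns(spills)); // [a, a, b, c, c, d, e]
    }
}
```

Equal keys from different runs end up adjacent in the output, which is what allows a Combiner to be applied again during the merge.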

Reduce shuffle

① copy ② merge ③ reduce

1:copy

The reduce task starts several copier threads, which fetch the map output files over HTTP from the NodeManager on which each MapTask ran.

The map output sits on the local disk of the node that ran the map task, but it is needed by the node that runs the reduce task for that partition. Moreover, the reduce task needs the output for its particular partition from several map tasks across the cluster. The map tasks may finish at different times, so the reduce task starts copying a map task's output as soon as that task completes.

The reduce task has a small number of copier threads so that it can fetch map outputs in parallel. The default is 5 threads, and it can be changed through the mapreduce.reduce.shuffle.parallelcopies property.

2:merge

The copied data is first placed in a memory buffer. The buffer size here is more flexible than on the map side: it is based on the JVM heap size setting. Because the reducer itself does not run during the shuffle phase, most of the memory can be given to shuffle.

Once all the map output belonging to the reducer has been copied, the reducer holds multiple files (if the total amount of fetched map output fits in the memory buffer, the data exists only in memory), and the merge operation starts; for files on disk this is a disk-to-disk merge. Because the output of each map is already sorted, merge performs a merge sort: the so-called sort on the reduce side is really this merge. It differs from the sort in the map phase precisely because the incoming data is already sorted, so the reduce side only has to merge many sorted map output files by key. In general, reduce sorts while it copies, that is, the copy and sort phases overlap rather than being completely separate. In the end, the reduce-side shuffle outputs a single block of data that is ordered as a whole.

3:reduce

When a reduce task has finished all copying and sorting, it builds a value iterator for each key in the sorted data. This is where grouping comes in. By default, grouping is based on the key; a custom grouping comparator class can be set with the job.setGroupingComparatorClass() method. As long as the comparator considers two keys equal, they belong to the same group: their values are placed in the same value iterator, and the key passed along with this iterator is the first key of the group.
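
The grouping rule can be modeled in a few lines of plain Java. This is a sketch under assumptions, not the Hadoop reduce driver: GroupingSketch and group are hypothetical names, the values are collected into lists instead of being exposed as lazy iterators, and the "year-suffix" keys are made up for the example.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;

// Models how sorted (key, value) pairs are cut into groups by a grouping comparator.
final class GroupingSketch {

    /**
     * Walks the sorted pairs once. A new group starts whenever the comparator says the
     * current key differs from the first key of the open group; that first key
     * represents the whole group.
     */
    static <K, V> List<Map.Entry<K, List<V>>> group(
            List<Map.Entry<K, V>> sorted, Comparator<? super K> groupingComparator) {
        List<Map.Entry<K, List<V>>> groups = new ArrayList<>();
        K current = null;
        List<V> values = null;
        for (Map.Entry<K, V> e : sorted) {
            if (values == null || groupingComparator.compare(current, e.getKey()) != 0) {
                current = e.getKey();
                values = new ArrayList<>();
                groups.add(Map.entry(current, values));
            }
            values.add(e.getValue());
        }
        return groups;
    }

    public static void main(String[] args) {
        // Hypothetical composite keys "year-suffix", already sorted by the full key.
        List<Map.Entry<String, Integer>> sorted = List.of(
                Map.entry("2020-a", 1), Map.entry("2020-b", 2), Map.entry("2021-a", 3));
        // Custom grouping: keys are equal when their first four characters (the year) match.
        Comparator<String> byYear = Comparator.comparing((String k) -> k.substring(0, 4));
        for (Map.Entry<String, List<Integer>> g : group(sorted, byYear)) {
            System.out.println(g.getKey() + " -> " + g.getValue());
        }
    }
}
```

With the year-only comparator, "2020-a" and "2020-b" fall into one group whose key is "2020-a", the first key encountered. With the natural String ordering as the comparator, each key would form its own group.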